Finding the right approach for your edge deployment

When building out an edge computing strategy, one of the biggest questions is where the data should be processed. Should everything be routed to a single central server? Or should the processing happen on-site, closer to where the data is created?

The answer depends on your environment. Centralized computing can work well in stable, controlled settings. But when you’re dealing with real-time decisions, multiple locations, or limited connectivity, a distributed model often performs better.

We build edge hardware to support both scenarios. Whether you're centralizing data for streamlined operations or distributing it across smart devices in the field, there’s a setup that fits. Understanding how these models differ, and when each one makes sense, is the first step to making your edge environment more efficient, scalable, and future-ready.

What is a distributed computing model?

Distributed computing spreads the workload across multiple devices or nodes, rather than relying on one central system. Each node has its own processing power and storage, allowing it to run tasks independently while still communicating with the rest of the network.

This setup brings a few key benefits:

- It reduces latency, because data can be processed right where it's generated.

- It increases system reliability: if one device fails, the others keep working.

- It scales easily: you can add more nodes as your system grows, without overhauling your infrastructure.

A great example is a network of cameras in a smart city. Instead of sending all video footage to a central server, each camera can run video analytics locally. That saves bandwidth and gives operators faster access to insights, such as congestion or safety issues, in real time.
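To make the bandwidth point concrete, here is a minimal Python sketch of a camera node that summarizes a batch of frames locally and uploads only the summary. Everything in it is an assumption for illustration: the `Frame` fields, the `summarize_locally` function, the congestion threshold of 20 vehicles, and the frame sizes are hypothetical, and a real deployment would take its counts from an actual on-device detector.

```python
import json
from dataclasses import dataclass


@dataclass
class Frame:
    camera_id: str
    vehicle_count: int  # hypothetical output of an on-device detector
    raw_bytes: int      # size of the encoded frame if it were uploaded


def summarize_locally(frames, congestion_threshold=20):
    """Reduce a batch of frames to one small summary message on the node."""
    counts = [f.vehicle_count for f in frames]
    peak = max(counts)
    return {
        "camera_id": frames[0].camera_id,
        "frames": len(frames),
        "avg_vehicles": sum(counts) / len(counts),
        "peak_vehicles": peak,
        "congested": peak >= congestion_threshold,
    }


def upload_sizes(frames, summary):
    """Return (bytes if raw frames were sent, bytes if only the summary is sent)."""
    raw = sum(f.raw_bytes for f in frames)
    summarized = len(json.dumps(summary).encode("utf-8"))
    return raw, summarized


# Illustrative values only: four frames of 150 kB each from one camera.
frames = [Frame("cam-01", n, 150_000) for n in (12, 18, 25, 9)]
summary = summarize_locally(frames)
raw, small = upload_sizes(frames, summary)
print(summary)
print(f"raw upload: {raw} bytes, summary upload: {small} bytes")
```

The central system still gets the congestion flag it needs, but the node decides what is worth sending instead of streaming every frame upstream.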

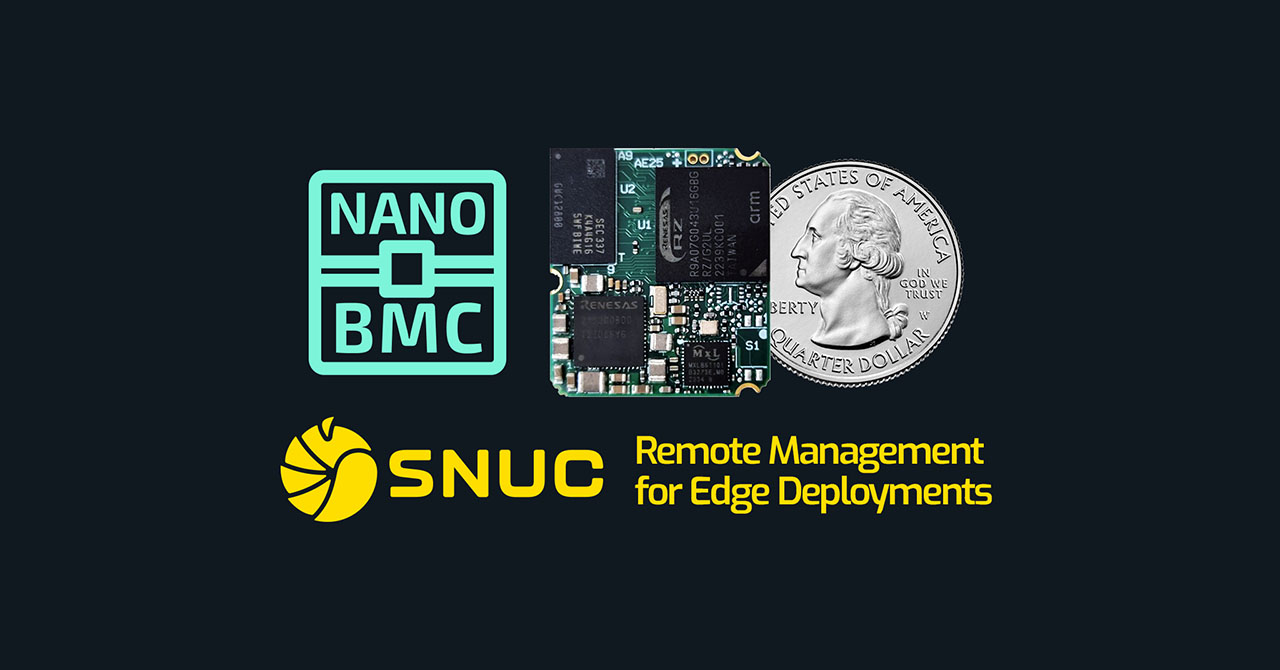

Devices like Simply NUC’s extremeEDGE Servers™ are built for exactly this kind of setup. They're compact, energy-efficient, and rugged enough for remote or outdoor environments. And with remote management tools included, you can keep tabs on every node without being on-site.

When centralized computing still makes sense

Distributed systems are powerful, but centralized computing still plays a valuable role, especially when your environment is stable, connectivity is strong, and most of the processing can be handled in one place.

In a centralized computing model, a single server takes on the heavy lifting. Client devices send data to the server, which processes it and sends back instructions or results. This setup is often used in office networks, internal applications, or any situation where a controlled hub can manage the workload efficiently.

Centralized systems are typically easier to maintain. With one core location to manage software updates, security protocols, and backups, your IT team spends less time coordinating across multiple devices. This can be a smart choice when the focus is on simplicity and predictability.

Simply NUC offers several compact, high-performance options that work well with centralized environments. The Mill Canyon NUC 14 Essential, for instance, is ideal for applications like retail hubs, streaming setups, and collaboration spaces. It’s a cost-effective system that delivers solid compute power and support for up to three displays, all in a small form factor that’s easy to install and manage.

For more performance-intensive tasks, the NUC 15 Pro (Cyber Canyon) offers faster processing, enhanced graphics, and broad OS compatibility. It is well suited to hosting digital signage software, managing connected point-of-sale terminals, or overseeing employee workstations, giving you central control with enough flexibility to scale.

Centralized computing works best when your data flow is predictable and your network is reliable. With the right hardware in place, you get the performance and stability needed to keep everything running smoothly.

Comparing architectures: Centralized vs distributed for edge

Choosing between centralized and distributed computing comes down to understanding what your system needs to do, where it needs to do it, and how quickly it needs to respond.

Centralized architecture:

- One core server handles all data processing

- Easier to maintain and update from a single location

- Lower hardware cost at the edge, since endpoints rely on the central server

- Best suited for office environments, internal systems, or any application with strong, consistent network access

Distributed architecture:

- Multiple nodes process data independently, closer to the data source

- Reduces latency and enables real-time decisions on site

- More resilient to outages or local failures

- Scales more easily across multiple locations or regions

For edge computing, distributed systems often provide better flexibility, especially when you're dealing with real-time intelligence, limited connectivity, or remote management challenges.

For example, a network of smart kiosks or manufacturing sensors can’t afford to pause every time there's a delay reaching the main server. They need to respond instantly, and that’s where processing data locally really shines.
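One common way to handle this is to ask the central server first but fall back to a local rule if the answer does not arrive in time. The Python sketch below illustrates that pattern under stated assumptions: `decide`, `local_decision`, the 0.2 second timeout, and the two simulated servers are all hypothetical, and the "remote call" is a plain function standing in for a real network request.

```python
import concurrent.futures
import time


def local_decision(reading, limit=80.0):
    """Simple on-device rule used when the central server is too slow."""
    return "stop" if reading > limit else "run"


def decide(reading, remote_call, timeout_s=0.2):
    """Ask the central server first; fall back to the local rule on delay or error."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(remote_call, reading)
    try:
        return future.result(timeout=timeout_s), "central"
    except Exception:
        # Covers both a timeout and any error raised by the remote call.
        return local_decision(reading), "local"
    finally:
        # Do not block the device while a slow request finishes in the background.
        pool.shutdown(wait=False)


def slow_server(reading):
    """Simulated central server on a congested link."""
    time.sleep(1.0)
    return "run"


def fast_server(reading):
    """Simulated central server on a healthy link."""
    return "stop" if reading > 75.0 else "run"


print(decide(90.0, slow_server))  # local rule answers because the server is too slow
print(decide(50.0, fast_server))  # central answer arrives within the timeout
```

The device always produces a decision within roughly the timeout, and the second value in the result records which path answered, which is useful when reviewing how often a site had to rely on its local rule.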

That said, many businesses find a middle ground with a hybrid edge strategy. You might centralize certain tasks, like long-term storage or analytics dashboards, while distributing the processing of time-sensitive tasks to devices in the field.
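A hybrid split like this can be expressed as a simple placement rule. The Python sketch below is one possible way to think about it, not a prescribed method: the `Task` type, the `place` function, the task names, and the 100 ms edge budget are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    max_latency_ms: int  # how long the result is allowed to take


def place(task, edge_budget_ms=100):
    """Run time-sensitive tasks on edge devices; send everything else to the center."""
    return "edge" if task.max_latency_ms <= edge_budget_ms else "central"


# Illustrative workload: latency figures are assumptions, not measurements.
tasks = [
    Task("safety_stop", 20),
    Task("kiosk_response", 80),
    Task("analytics_dashboard", 5_000),
    Task("long_term_storage", 60_000),
]

plan = {t.name: place(t) for t in tasks}
for name, location in plan.items():
    print(f"{name}: {location}")
```

In practice the rule would likely weigh more than latency, such as data volume or whether a task needs data from several sites, but a single threshold is enough to show how the two models can coexist in one deployment.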

How Simply NUC supports both models

Every edge strategy is different. Some businesses need the simplicity of centralized control. Others rely on local decision-making across multiple sites. And many fall somewhere in between. That’s why Simply NUC designs systems that can support both approaches, so you’re not locked into one way of working.

If your project calls for distributed computing, devices like the extremeEDGE Servers™ are purpose-built for the job. They deliver robust performance at the data source, whether that’s a warehouse floor, roadside cabinet, or field unit in a remote location. With fanless designs and extended temperature tolerance, they hold up in demanding environments. And with built-in remote management features, you can deploy and support them without needing a technician on-site.

For more centralized setups, where processing is handled in one location and edge devices act as terminals or data collectors, we offer compact systems like Mill Canyon and Cyber Canyon. These platforms are ideal for retail spaces, signage networks, or collaboration hubs. You still get plenty of computing power, flexible storage options, and support for modern operating systems, but in a form factor that’s easy to install, manage, and scale.

We also know that many businesses want to blend both models. That’s why Simply NUC devices are configurable. Whether you need extra I/O, custom OS images, or specialized mounting options, we can tailor each system to match your infrastructure and workload.

Useful Resources:

Edge computing in manufacturing